Recent Stories

Stories / Feb 5, 2026

Celebrating Black History Month 2026

Stories / Jan 27, 2026

Maryland Engineering Maintains Status as National Leader in...

Stories / Jan 16, 2026

Cholesterol Found to Play Key Role in Protecting the...

Stories / Jan 15, 2026

UMD Hosts 6th Annual MASBN Symposium

Stories / Jan 13, 2026

Sensor Advancement Breaks Barriers in Brain-Behavior Research

Stories / Dec 16, 2025

University of Maryland Represented at International Forum

Stories / Nov 21, 2025

Engineering at Maryland magazine solves for excellence

Stories / Nov 14, 2025

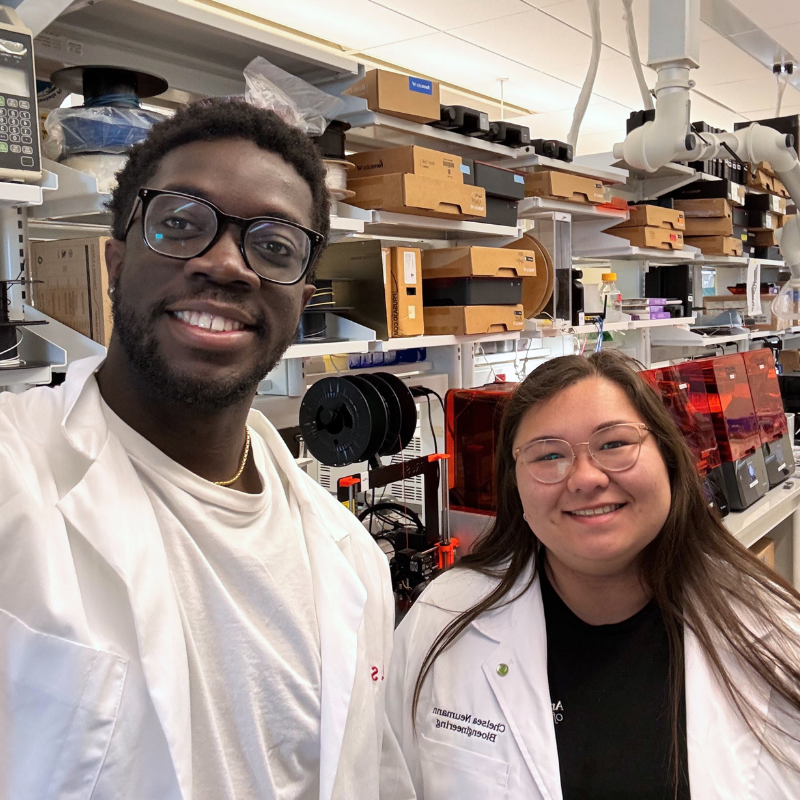

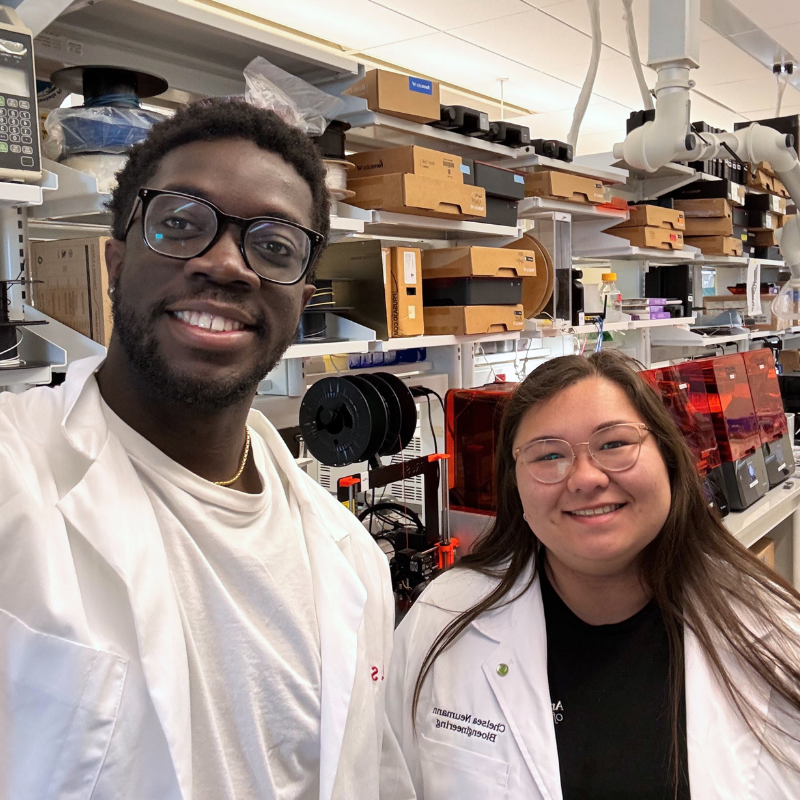

Fischell Institute Welcomes New MPower Fellows: Chelsea Neumann...

Stories / Nov 14, 2025

AIChE Recognizes Bentley and Jewell as Fellows

Stories / Nov 13, 2025

UMD Battery Study Addresses Key Barrier to Electrifying...